Communia Association, Link (CC-0)

Today, on the verge of the implementation deadline for the CDSM Directive, the European Commission has published its long-awaited guidance on the application of Article 17 of the Directive, in the form of a Communication from the Commission to the European Parliament and the Council. The structure of the final guidance largely follows the outline of the Commission’s targeted consultation on the guidance from July 2020, but there are significant changes to the substance of the final document. The final version of the guidance makes it clear that the European Commission has completely undermined the position it took before the CJEU: that Article 17 is compatible with fundamental rights as long as only manifestly infringing content can be blocked automatically.

In the final guidance, the Commission maintains that it is “not enough for the transposition and application of Article 17(7) to restore legitimate content ex post under Article 17(9), once it has been removed or disabled” and argues that only manifestly infringing content should be blocked automatically, but these “principles” are included in name only. By introducing the ability for rightholders to “earmark” any content that has the potential to “cause significant economic harm”, the guidance allows rightholders to override these principles easily, whenever they see fit, and to force platforms to automatically block user uploads even when they are not manifestly infringing.

In the remainder of this post, we will examine these last-minute changes to the guidance in more detail. Before we do that, we will briefly recall the key problem that the guidance was supposed to resolve and the principles that underpinned previous versions of the guidance.

The key problem that the guidance tries to resolve

To comply with Article 17(4), platforms will need to use automated content recognition technology to filter out uploads containing works that rightholders have identified for blocking. To comply with Article 17(7) of the Directive, platforms have to ensure that uses of works that do not infringe copyright are not prevented as a result of the cooperation between rightholders and platforms. Automated content recognition technology can reliably tell when a work identified by rightholders is contained in an upload (which makes it well suited to complying with the requirements of Article 17(4)), but it cannot tell whether an individual use is legitimate (which makes it unsuited to complying with the requirements of Article 17(7)).

This means that any use of automated content recognition technology to comply with Article 17(4) will result in overblocking of legitimate uploads, which in turn will infringe fundamental rights protected by the EU Charter of Fundamental Rights. (This is why Poland’s challenge to Article 17 alleges that the article violates fundamental rights.) This effect is amplified by the fact that platforms face liability for copyright infringement if they do not comply with their obligations under Article 17(4), while they face no comparable risk if they do not comply with their obligations under Article 17(7). This gives platforms an incentive to err on the side of removal, which leads to the blocking of legitimate uploads.

Article 17(10) of the Directive required the Commission to organize stakeholder dialogues and, “taking into account the results of the stakeholder dialogues, issue guidance on the application of this Article, in particular regarding the cooperation referred to in paragraph 4. When discussing best practices, special account shall be taken, among other things, of the need to balance fundamental rights and of the use of exceptions and limitations.”

Principles for the application of automated content recognition technologies in implementations of Article 17

The stakeholder dialogue took place between October 2019 and February 2020. Based on the input received during the stakeholder dialogue, the Commission held a targeted consultation in the summer of 2020, in which it presented principles that the guidance should address. These principles showed that the Commission had clearly understood the issues at stake and that it was willing to issue guidance containing protections for users’ fundamental rights. More specifically, the Commission proposed that the guidance should state that:

- Member States should transpose into their national legislation the mandatory exceptions for quotation, criticism, review and uses for the purpose of caricature, parody or pastiche from the second part of Article 17(7);

- Member States should explicitly transpose into their national legislation the first part of Article 17(7) and that the objective of national implementations “should be to ensure that legitimate content is not blocked when technologies are applied by online content-sharing service providers under Article 17(4) letter (b) and the second part of letter (c)”;

- Therefore it “is not enough for the transposition and application of Article 17 (7) to only restore legitimate content ex post, once it has been blocked” and that when “service providers apply automated content recognition technologies under Article 17(4) […] legitimate uses should also be considered at the upload of content”;

- To achieve this objective “in practice, automated blocking of content identified by the rightholders should be limited to likely infringing uploads, whereas content, which is likely to be legitimate, should not be subjected to automated blocking and should be available”;

- When a platform does block likely infringing uploads, “users should still be able to contest the blocking under the redress mechanism provided for in Article 17(9)”;

- When a platform matches uploads that are not likely infringing with a work identified by rightholders to be blocked, the platform has to notify the uploader and must give them the ability to contest the infringing nature of the upload. If the uploader contests the infringing nature, the “service providers should submit the upload to human review for a rapid decision as to whether the content should be blocked or be available. Such content should remain online during the human review”.

Taken together, these principles would have constituted a relatively strong set of safeguards against overblocking resulting from the use of automated content recognition. The key element here is the limitation of fully automated blocking to “likely infringing” uploads, together with the requirement of some form of human intervention in all other cases where the use of third-party works does not meet the “likely infringing” threshold. In this respect, the Commission’s proposal from summer 2020 echoed the approach proposed by a large group of academics during the initial phases of the stakeholder dialogue in November 2019, who argued for limiting the scope of fully automated filtering to cases of prima facie copyright infringement.

In November 2020, the Commission relied on those principles to defend the legality of Article 17 before the CJEU in Case C-401/19 (the Polish government’s challenge to Article 17). Together with the Parliament and the Council, the Commission defended Article 17 against the claim made by the Polish government that its application would limit users’ fundamental right to freedom of expression and that it should therefore be annulled.

In its oral intervention before the CJEU, the Commission argued that Article 17 is compliant with fundamental rights because the requirement not to prevent the availability of non-infringing uploads contained in Article 17(7) is an “obligation of result” and therefore takes precedence over the “obligation of best effort” to prevent the availability of works identified by rightholders contained in Article 17(4). The Commission further argued that the upcoming guidance would ensure that this hierarchy of obligations would work in practice. To achieve this, national transpositions would need to limit fully automated blocking to “manifestly infringing” uploads only and would be required to contain ex ante safeguards for all uploads that do not meet the “manifestly infringing” threshold.

Undermining the principles by “earmarking” content that “could cause significant economic harm”

Since the CJEU hearing in November of last year, the Commission has been largely silent on the guidance. The release of the document, which had initially been expected at the beginning of 2021, was repeatedly postponed. The final version published today shows that, during this period, the Commission’s thinking on the guidance has undergone a significant shift. This shift not only weakens the relatively strong user rights safeguards that the Commission had originally proposed, but also undermines key aspects of the arguments that the Commission made in front of the CJEU.

The idea of allowing rightholders to earmark “specific content which is protected by copyright and related rights, the unauthorised online availability of which could cause significant economic harm to them” is a new addition to the Commission’s guidance, which, as far as we know, was only introduced in the final months of the private discussions of the document at the political level, supposedly to address specific challenges posed by so-called “high value content”.

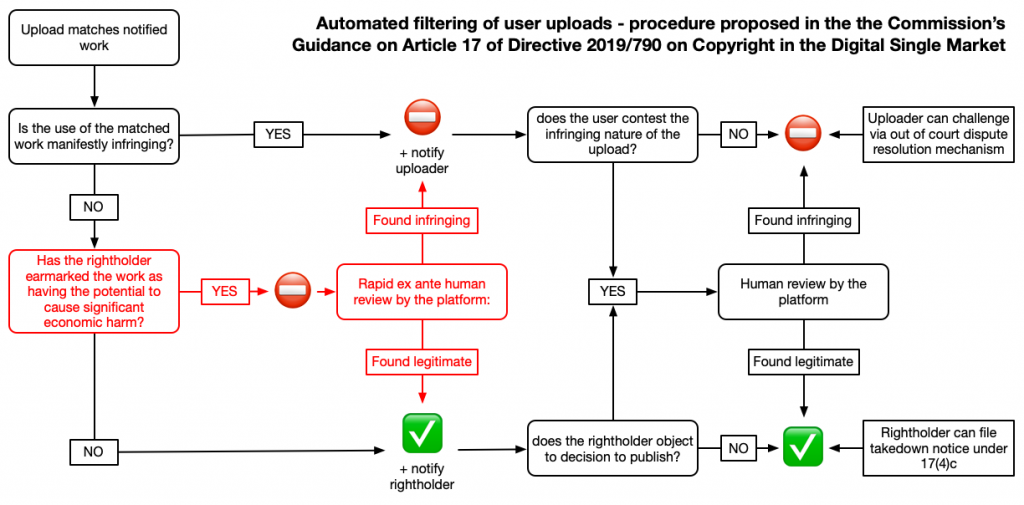

Schematic overview of the mechanism proposed in the guidance, with the new earmarking mechanism highlighted in red.

While the final version of the guidance seemingly preserves the idea that “automated blocking, i.e. preventing the upload by the use of technology, should in principle be limited to manifestly infringing uploads”, it introduces a mechanism that allows rightholders to easily circumvent this principle. They can do so by “earmarking” content as content “the unauthorised online availability of which could cause significant economic harm to them” as part of the process of providing platforms with the “necessary and relevant information” for the application of the upload filtering mechanisms imposed by Articles 17(4)(b) and (c).

The guidance does not establish any qualitative or quantitative requirements or criteria for rightholders to earmark their content. The relevant passage in Section V.2 simply states that they “may choose” to do so (emphasis ours):

When providing the relevant and necessary information to the service providers, rightholders may choose to identify specific content which is protected by copyright and related rights, the unauthorised online availability of which could cause significant economic harm to them. The prior earmarking by rightholders of such content may be a factor to be taken into account when assessing whether online content-sharing service providers have made their best efforts to ensure the unavailability of this specific content and whether they have done so in compliance with the safeguards for legitimate uses under Article 17(7), as explained in part VI below.

The lack of criteria under which rightholders may earmark their content, coupled with undefined concepts like “significant economic harm” that merely need to be possible (“could cause”), effectively allows rightholders to earmark all of their content as content “the unauthorised online availability of which could cause significant economic harm to them”. This creates a loophole so large that the “principle” that automated blocking should be limited to manifestly infringing uploads can hardly be considered a principle anymore.

As outlined above, this shift in position seems particularly important in the context of the pending CJEU case, as the European Commission’s defense of the fundamental rights compliance of Article 17(4) was centred on this very “principle”.

The final version of the guidance further states that content that has been earmarked by rightholders must be subject to “particular care and diligence”, when it comes to the application of Article 17(4) in compliance with Article 17(7) and (9). Section VI of the guidance notes that:

In particular, service providers should exercise particular care and diligence in application of their best efforts obligations before uploading content, which could cause significant economic harm to rightholders (see section V.2 above). This may include, when proportionate and where possible, practicable, a rapid ex ante human review by online content-sharing service providers of the uploads containing such earmarked content identified by an automated content recognition tool. This would apply for content, which is particularly time sensitive (e.g. pre-released music or films or highlights of recent broadcasts of sports events) [footnote: Other types of content may also be time-sensitive]. (…) There should be no need for ex ante human review for content, which is no longer time sensitive [footnote: For example, in case of a football match the sensitivity may be a matter of days]. Service providers who decide to include rapid ex ante human review as part of their compliance mechanism should include mechanisms to mitigate the risks of misuse.

These paragraphs exemplify how service providers can comply with the requirement to “exercise particular care and diligence” with regard to earmarked content: service providers may decide to apply a “rapid ex ante human review” (more precisely: service providers may decide to automatically block uploads of earmarked content until a human review process has been concluded, which should happen rapidly). When it is neither “proportionate” nor “possible, practicable” to conduct a “rapid ex ante human review”, service providers are still required to “exercise particular care and diligence”, but the guidance does not offer any examples of how that heightened care could be exercised in those cases.

At first sight, these sections also seem to establish additional criteria for qualifying content that “could cause significant economic harm”. Upon closer inspection, however, these passages merely establish for which types of earmarked content it would be appropriate for service providers to apply “a rapid ex ante human review”: content that is “particularly time sensitive”. While the guidance gives examples of content for which such special treatment might be justifiable, the footnote stating that “other types of content may also be time-sensitive” renders this attempt to narrow down the category rather meaningless.

The subsequent passage of the guidance tries to limit the requirement for service providers to take “heightened care” to “cases of high risks of significant economic harm, which ought to be properly justified by rightholders”. But in doing so, it fails to define what constitutes “high risk”, leaving service providers with the difficult task of assessing what constitutes “high risk” based solely on the justification provided by rightholders:

In order to ensure the right balance between the different fundamental rights at stake, namely the users freedom of expression, the rightholders intellectual property right and the providers right to conduct a business, this heightened care for earmarked content should be limited to cases of high risks of significant economic harm, which ought to be properly justified by rightholders. This mechanism should not lead to a disproportionate burden on service providers nor to a general monitoring obligation.

A final passage makes it clear that service providers are “deemed not to have complied, until proven otherwise, with their best effort obligations” and can “be held liable for copyright infringement” if they disregard “information on earmarked content as mentioned in section V.2 above”:

Online content-sharing service providers should be deemed to have complied, until proven otherwise, with their best efforts obligations under Article 17(4)(b) and (c) in light of Article 17 (7) if they have acted diligently as regards content which is not manifestly infringing following the approach outlined in this guidance, taking into account the relevant information from rightholders. By contrast, they should be deemed not to have complied, until proven otherwise, with their best effort obligations in light of Article 17 (7) and be held liable for copyright infringement if they have made available uploaded content disregarding the information provided by rightholders, including – as regards content that is not manifestly infringing content – the information on earmarked content as mentioned in section V.2 above.

In effect, this means that service providers risk losing the liability protections afforded to them by Article 17(4) unless they apply special treatment to all uploads that rightholders have earmarked as merely having the potential to “cause significant economic harm”. Under these conditions, rational service providers will have to resort to blocking all uploads containing earmarked content at upload. The scenario described in the guidance is therefore identical to an implementation with barely any safeguards: platforms have no choice but to block every upload that contains parts of a work that rightholders have told them is highly valuable.

Conversely, the ability to earmark content as merely having the potential to “cause significant economic harm” gives rightholders the ability to require the automated blocking of any uploads containing their content, regardless of whether these are manifestly infringing or not. In fact, if national lawmakers do not create incentives against the abuse of this system, namely via sanctions and transparency obligations (as the initial guidance proposal foresaw), it seems realistic to assume that rightholders will consider all content for which they provide relevant and necessary information to be economically important and will thus earmark all of the notified content.

By silently adding the mechanism during the extra time of the Article 17(10) process, the Commission has not only completely undermined the principle on which the initial guidance was based, but has also invalidated the position it has taken in the pending CJEU case on the fundamental rights compliance of Article 17.

This reversal is especially stark since the Commission does not provide any justification or rationale for why users’ fundamental rights should not apply in situations where rightholders claim that they could potentially suffer significant economic harm. It is hard to imagine that the CJEU will consider the version of the guidance published today to provide meaningful protection for users’ rights when it has to determine the compliance of the Directive with fundamental rights. The Commission seems to be acutely aware of this as well, and so it has wisely included the following disclaimer in the introduction of the guidance:

The judgment of the Court of Justice of the European Union in the case C-401/19 will have implications for the implementation by the Member States of Article 17 and for the guidance. The guidance may need to be reviewed following that judgment.

In the end this may turn out to be the most meaningful sentence in the entire guidance.